Artificial intelligence can compose video game music of the future

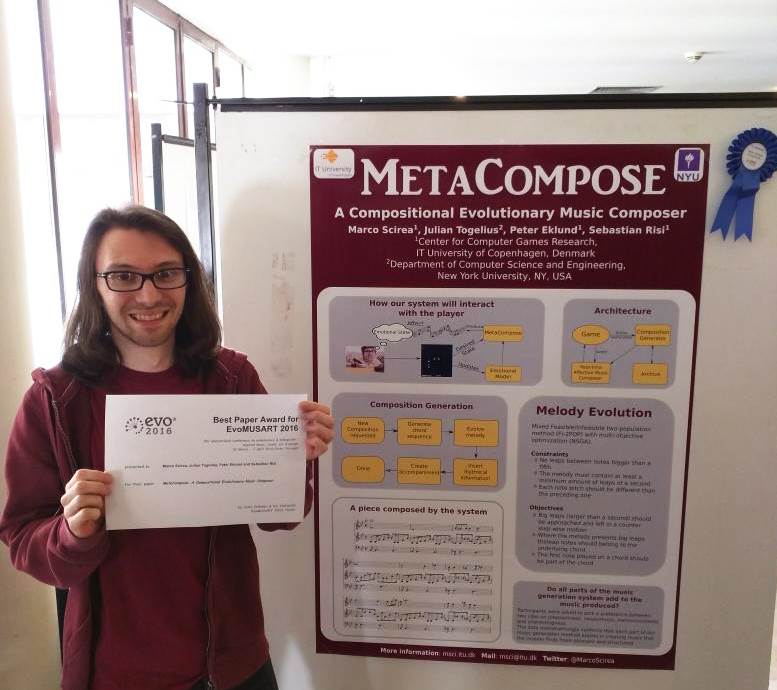

Marco Scirea, a PhD student at the IT University of Copenhagen, won the best paper award at the EvoMUSART conference for his research on music composition using artificial intelligence.

Written 7 April, 2016 10:14 by Vibeke Arildsen

Can artificial intelligence create music that is harmonious, pleasant and interesting to human ears? The answer is yes, according to Marco Scirea, a PhD student at ITU and author of the winning paper at EvoMUSART (International Conference on Evolutionary and Biologically Inspired Music, Sound, Art and Design).

Marco Scirea took home the best paper award from the EvoMUSART conference in Porto.

This finding might be good news for game developers and players alike, says Marco Scirea, who is currently developing MetaCompose, a music generator for use in video games.

“MetaCompose produces music clips in real-time that reflect the actions and mood of the game as well as the emotional state of the player. If we can express moods through music, we can reflect the player's emotional state, reinforce it, or try to manipulate it. The goal of our research is to provide the player with a better experience and developers with a tool that can guide user experience through music,” Marco Scirea explains. He expects to finish his project next summer.

“Currently we're working on integrating MetaCompose with a game to allow us to develop the emotional model of the player and finally study the effects of the generated music on the player's experience. I think the best paper award shows that my research is not just niche, but something that can create repercussions in the field of computational creativity for art, games, and user experience.”

Marco Scirea’s PhD project is supervised by Peter Eklund and Sebastian Risi (IT University of Copenhagen) and Julian Togelius (New York University). The paper can be downloaded here.

Read more about MetaCompose and listen to its music compositions here.

Vibeke Arildsen, Press Officer, phone 2555 0447, email viar@itu.dk